Building Intelligent Agents: How to Implement LLM Workflows with the Model Context Protocol

"Unlocking the Future of AI: Seamless Integration and Optimization in Next-Gen Systems"

In the past year, we've witnessed an explosion of applications leveraging Large Language Models (LLMs) like Claude and GPT-4. However, many of these applications follow a simplistic pattern: send a prompt, get a response. While this approach works for basic use cases, it quickly breaks down when building more sophisticated AI applications that need to interact with external systems, process complex inputs, or perform multi-step reasoning.

Today, I want to introduce you to a more structured approach for building intelligent agents with LLMs: the Model Context Protocol (MCP) and agent workflow patterns. These architectural patterns have emerged from the work of dozens of teams building production LLM applications, and they provide a foundation for creating more capable, secure, and maintainable AI systems.

The Problem with Simple Prompting

Let's start by understanding why we need more sophisticated architectures for LLM applications. Consider a customer support agent that needs to:

Access customer information from a database

Search for relevant articles in a knowledge base

Update ticket statuses in a support system

Generate personalized responses for different types of queries

Trying to accomplish all of this with a single, large prompt quickly becomes unwieldy. You'd need to inject sensitive customer data into the prompt, define complex instructions for different scenarios, and trust that the model will always interpret your instructions correctly.

This approach creates several problems:

Security risks: Sensitive data is exposed directly to the LLM

Limited control: You have little control over what actions the LLM takes

Poor maintainability: Changes to business logic require rewriting complex prompts

Unreliable execution: The LLM might misinterpret instructions for complex workflows

The Model Context Protocol: A Better Foundation

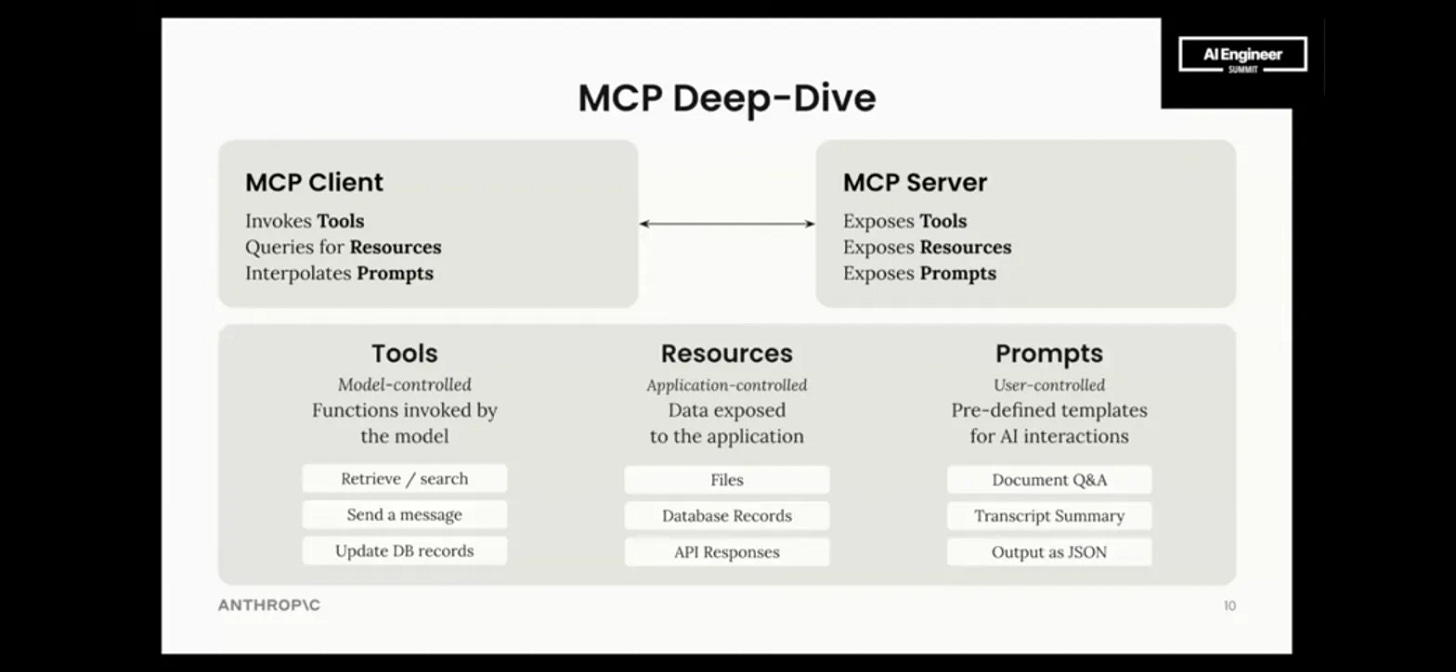

The Model Context Protocol (MCP) addresses these issues by clearly separating three key components of AI applications:

Tools: Functions that the LLM can call to perform actions in the world

Resources: Data sources that the application can access

Prompts: Templates that structure how users interact with the AI

This separation creates a more secure, maintainable, and governed architecture. Let me explain each component in more detail.

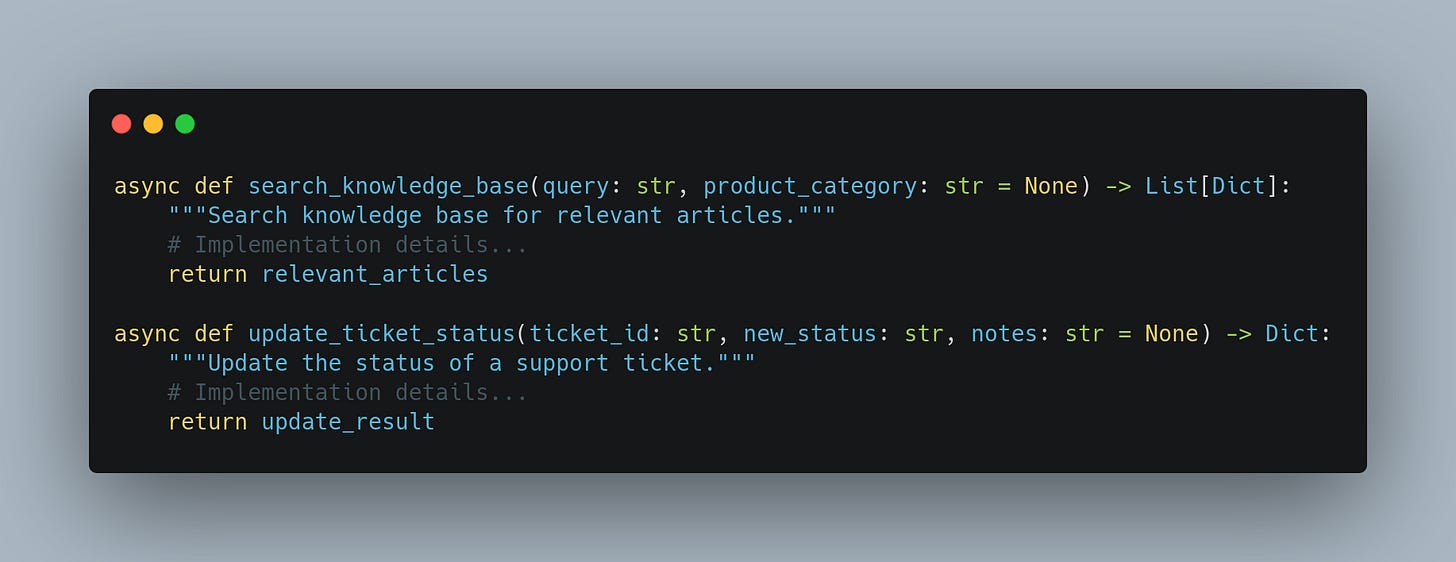

Tools: Model-Controlled Functions

Tools are functions that the LLM can invoke to perform specific actions. They have well-defined inputs and outputs, and they're called based on the model's decisions. For example, a customer support agent might have tools for searching the knowledge base and updating ticket statuses.

By defining these as explicit tools rather than instructions in a prompt, we gain several advantages:

We can implement proper error handling and validation

We can log tool usage for auditing purposes

We can update tool implementations without changing prompts

The LLM's role is focused on deciding which tools to use rather than how to implement them
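To make these advantages concrete, here is a minimal, framework-free Python sketch of a tool registry. It is not the MCP SDK's API: `Tool`, `ToolRegistry`, and the `update_ticket_status` tool are hypothetical names, and the in-memory `TICKETS` dictionary stands in for a real support system. The sketch shows argument validation, errors reported back to the model instead of crashing the app, and an audit log, all living outside the prompt.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

@dataclass
class Tool:
    """A model-callable function plus the metadata the model sees (hypothetical shape)."""
    name: str
    description: str
    required_args: dict[str, type]  # argument name -> expected Python type
    func: Callable[..., Any]

@dataclass
class ToolRegistry:
    tools: dict[str, Tool] = field(default_factory=dict)
    audit_log: list[dict[str, Any]] = field(default_factory=list)

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def call(self, name: str, args: dict[str, Any]) -> dict[str, Any]:
        """Validate and run a tool call the model requested, logging every attempt."""
        try:
            if name not in self.tools:
                raise ValueError(f"unknown tool {name!r}")
            tool = self.tools[name]
            for arg, expected in tool.required_args.items():
                if not isinstance(args.get(arg), expected):
                    raise ValueError(f"argument {arg!r} must be {expected.__name__}")
            result = {"ok": True, "result": tool.func(**args)}
        except ValueError as exc:
            # Report the error back to the model instead of crashing the application.
            result = {"ok": False, "error": str(exc)}
        self.audit_log.append({"tool": name, "args": args, **result})
        return result

# Hypothetical stand-in for a real support system.
TICKETS = {"T-100": "open"}

def update_ticket_status(ticket_id: str, status: str) -> str:
    if ticket_id not in TICKETS:
        raise ValueError(f"unknown ticket {ticket_id!r}")
    TICKETS[ticket_id] = status
    return f"{ticket_id} -> {status}"

registry = ToolRegistry()
registry.register(Tool(
    name="update_ticket_status",
    description="Set the status of a support ticket.",
    required_args={"ticket_id": str, "status": str},
    func=update_ticket_status,
))

print(registry.call("update_ticket_status", {"ticket_id": "T-100", "status": "resolved"}))
print(registry.call("update_ticket_status", {"ticket_id": 42, "status": "resolved"}))
```

Because validation and logging sit in the registry, replacing the in-memory store with a real API client would not require touching any prompt.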

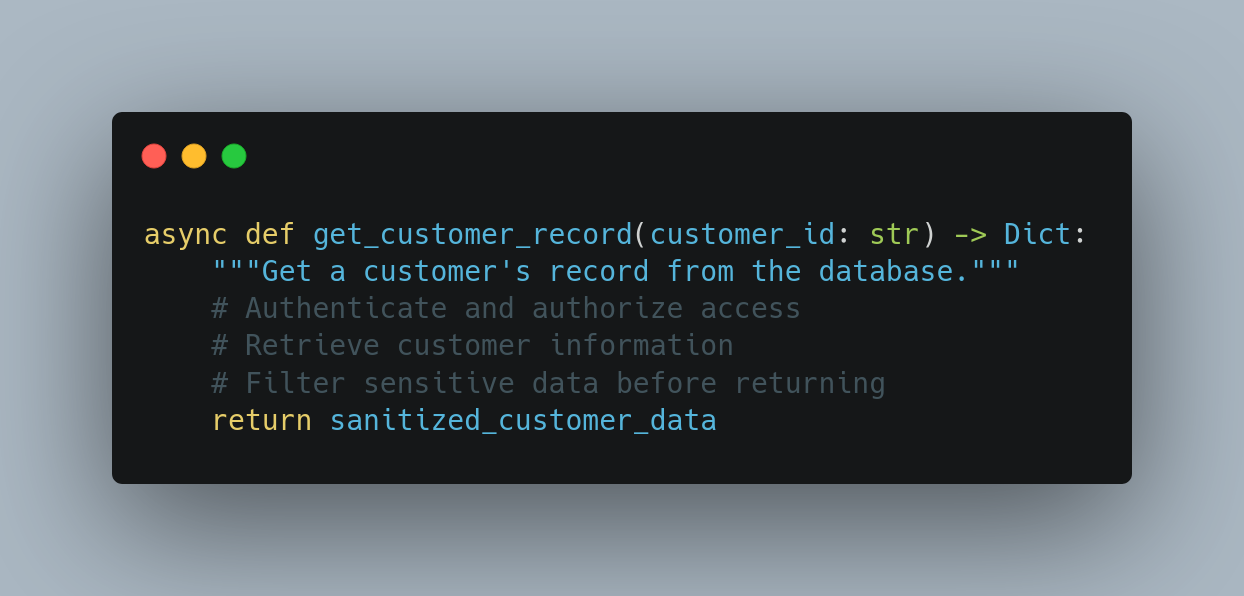

Resources: Application-Controlled Data

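Resources are data sources that the application controls. They provide secure access to application data with proper authentication and filtering of sensitive information. For example, customer and product information can be exposed as resources that the application authorizes and filters before any of it reaches the model.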
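As an example, here is a minimal sketch of an application-controlled resource, assuming a hypothetical `customer://<id>` URI scheme, an in-memory `CUSTOMERS` store, and a simple role check. The point is where the logic lives: the application, not the model, decides who may read a record and which fields are removed before the data enters the model's context.

```python
from typing import Any

# Hypothetical stand-in for a customer database.
CUSTOMERS: dict[str, dict[str, Any]] = {
    "c-1": {"name": "Ada", "plan": "pro", "email": "ada@example.com",
            "card_number": "4111111111111111"},
}

# Fields the application never forwards to the model (an assumption for this sketch).
SENSITIVE_FIELDS = {"email", "card_number"}

def read_resource(uri: str, caller_roles: set[str]) -> dict[str, Any]:
    """Resolve a hypothetical customer://<id> URI into data that is safe for the model's context."""
    scheme, _, customer_id = uri.partition("://")
    if scheme != "customer":
        raise ValueError(f"unsupported resource URI: {uri}")
    # Authorization is enforced by the application, not requested from the model.
    if "support_agent" not in caller_roles:
        raise PermissionError("caller may not read customer resources")
    record = CUSTOMERS.get(customer_id)
    if record is None:
        raise LookupError(f"no customer {customer_id!r}")
    # Filter sensitive fields before anything reaches the LLM.
    return {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}

print(read_resource("customer://c-1", {"support_agent"}))  # {'name': 'Ada', 'plan': 'pro'}
```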

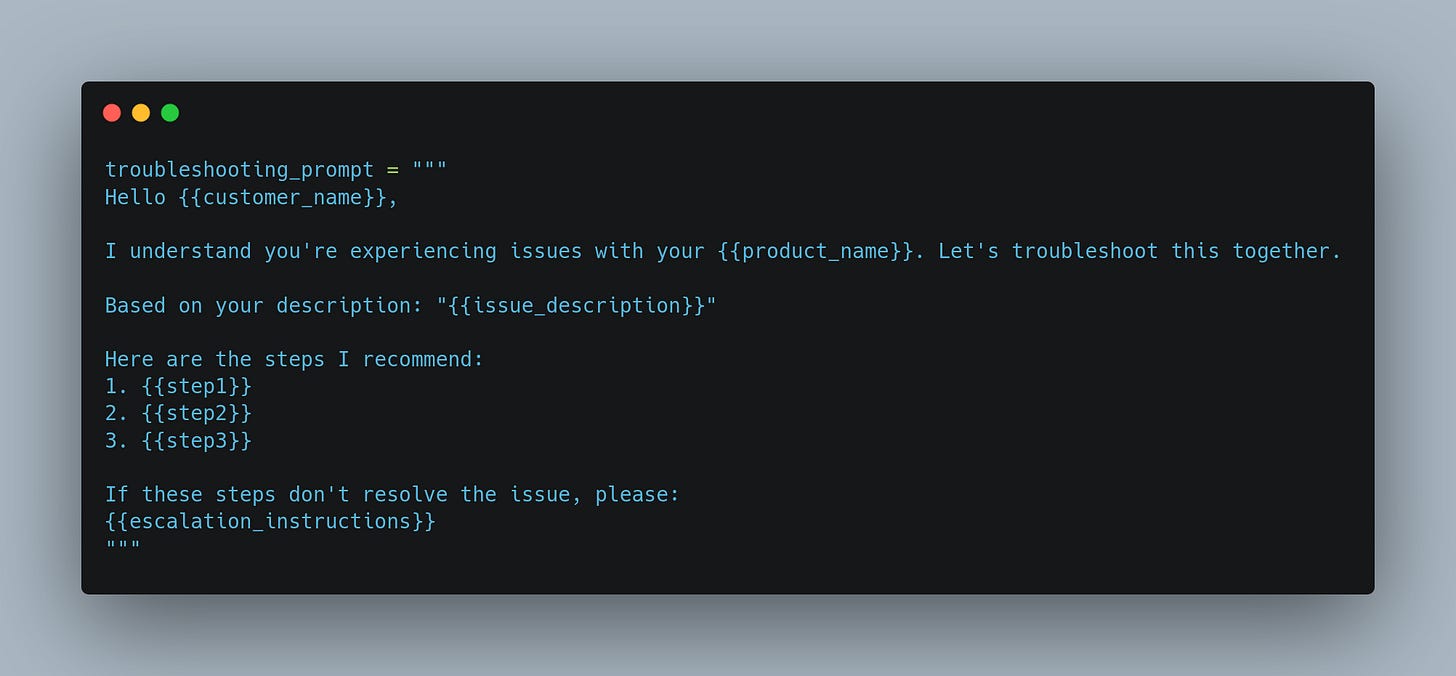

Prompts: User-Controlled Templates

Prompts are templates that structure how users interact with the LLM. They include placeholders for dynamic content, such as a customer's name or question, and help keep the AI's responses consistent.

By using prompt templates, we:

Ensure consistent tone and formatting

Easily update response formats without changing business logic

Separate content from logic
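A minimal sketch of a prompt template using Python's standard `string.Template`. The template name `support_reply` and its wording are illustrative assumptions, not part of MCP; what matters is that the wording lives in one place and the code only supplies values.

```python
from string import Template

# Hypothetical template library, stored and versioned separately from business logic.
PROMPTS = {
    "support_reply": Template(
        "You are a friendly support agent for $company.\n"
        "Customer name: $customer_name\n"
        "Customer question: $question\n"
        "Answer in at most three short paragraphs, then ask whether the issue is resolved."
    ),
}

def render_prompt(name: str, **values: str) -> str:
    """Fill a named template; Template.substitute raises KeyError if a placeholder is missing."""
    return PROMPTS[name].substitute(**values)

prompt = render_prompt(
    "support_reply",
    company="Acme",
    customer_name="Ada",
    question="How do I reset my password?",
)
print(prompt)
```

Changing the reply format means editing the template string only; `render_prompt` and its callers stay the same.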

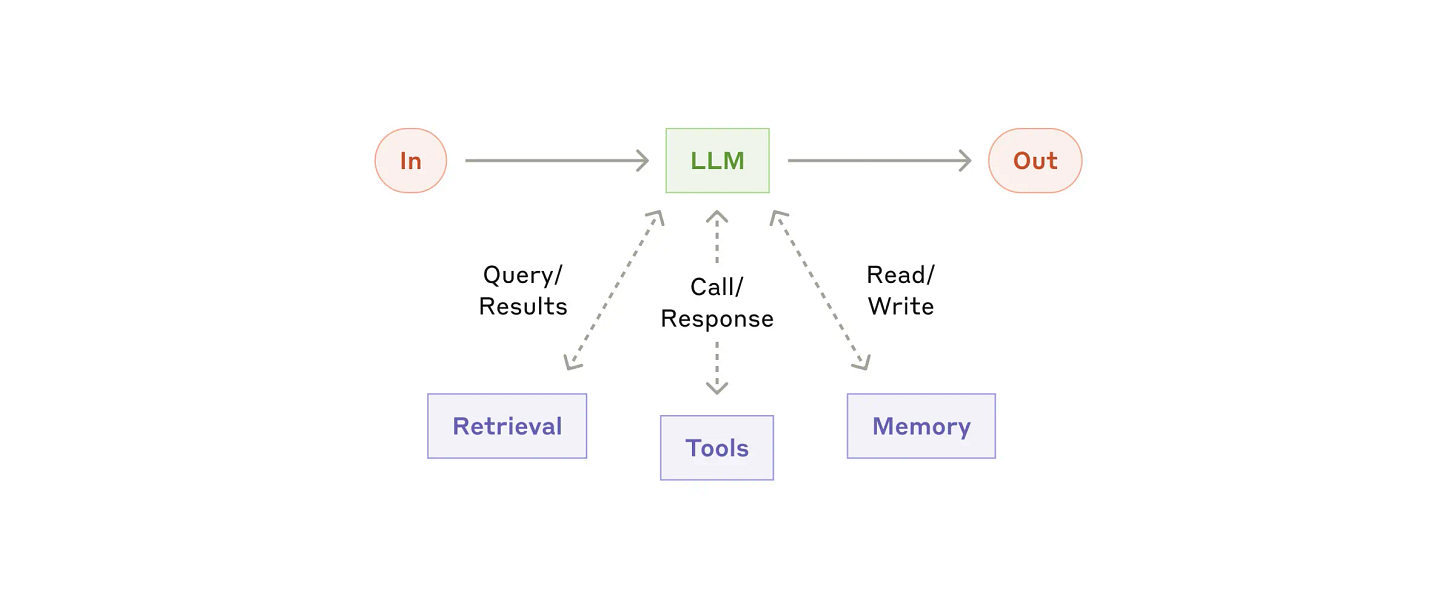

Agent Workflow Patterns

With MCP as our foundation, we can now implement more sophisticated agent workflow patterns. These patterns represent different ways of orchestrating LLMs to solve complex problems. Let's explore some of the most effective patterns.

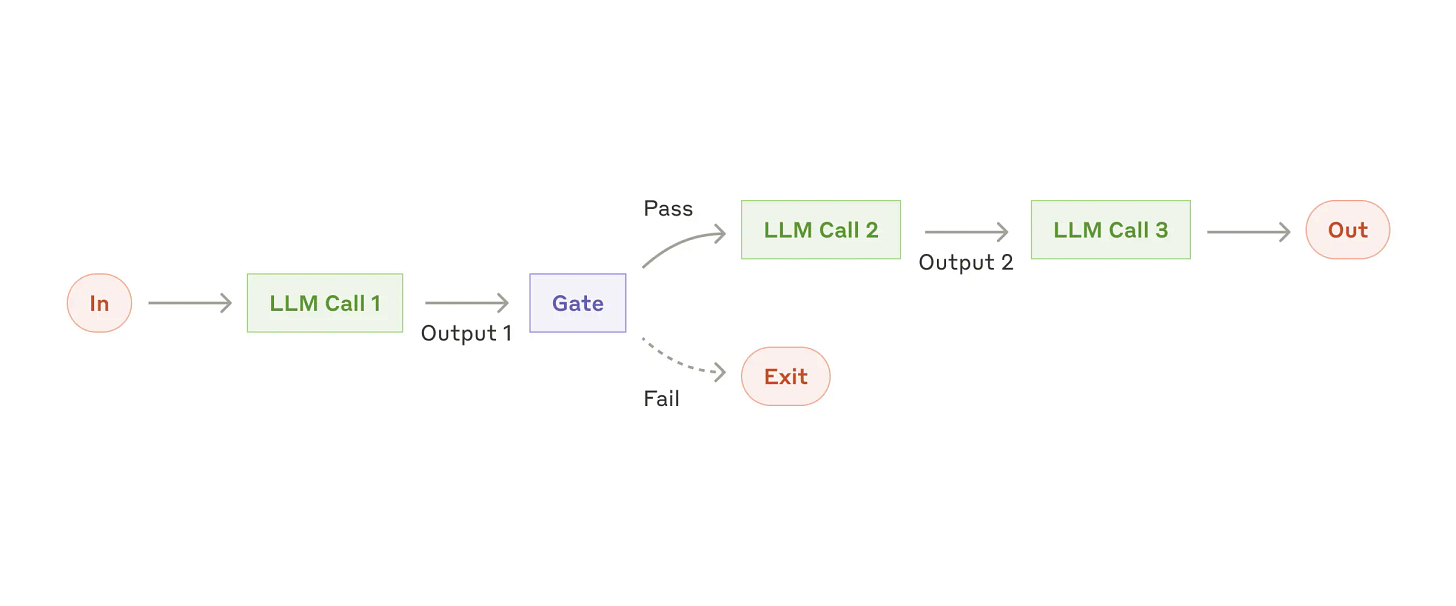

Pattern 1: Prompt Chaining

The Prompt Chaining pattern breaks a complex task into a sequence of simpler subtasks, with each LLM call processing the output of the previous one. This is like an assembly line for thought, where each station adds value to the final product.

For example, to write a high-quality product description, we might chain:

Research key features and benefits

Create an outline with key selling points

Write an initial draft based on the outline

Refine the draft for clarity and persuasiveness

By breaking the task into sequential steps, we often achieve higher quality results than attempting to generate everything in one go. Each step can focus on a specific aspect of the task, allowing the LLM to dedicate its full attention to that component.
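The chain above can be sketched as a loop in which each step's output becomes the next step's input. `fake_llm` is a hypothetical placeholder for a real model call, and the optional `check` hook (a programmatic validation between steps) is an addition for illustration rather than something the pattern requires.

```python
from typing import Callable, Optional

def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call: echoes the prompt's first line so the flow is visible."""
    return f"<output of: {prompt.splitlines()[0]}>"

# One prompt per step; {input} receives the previous step's output.
STEPS = [
    "Research key features and benefits of this product:\n{input}",
    "Create an outline with key selling points from this research:\n{input}",
    "Write an initial draft based on this outline:\n{input}",
    "Refine this draft for clarity and persuasiveness:\n{input}",
]

def run_chain(
    llm: Callable[[str], str],
    steps: list[str],
    initial_input: str,
    check: Optional[Callable[[int, str], bool]] = None,
) -> str:
    text = initial_input
    for i, template in enumerate(steps):
        text = llm(template.format(input=text))
        # Optional check between steps: stop early instead of passing bad output on.
        if check is not None and not check(i, text):
            raise RuntimeError(f"step {i} failed its check")
    return text

description = run_chain(fake_llm, STEPS, "Noise-cancelling headphones")
print(description)
```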

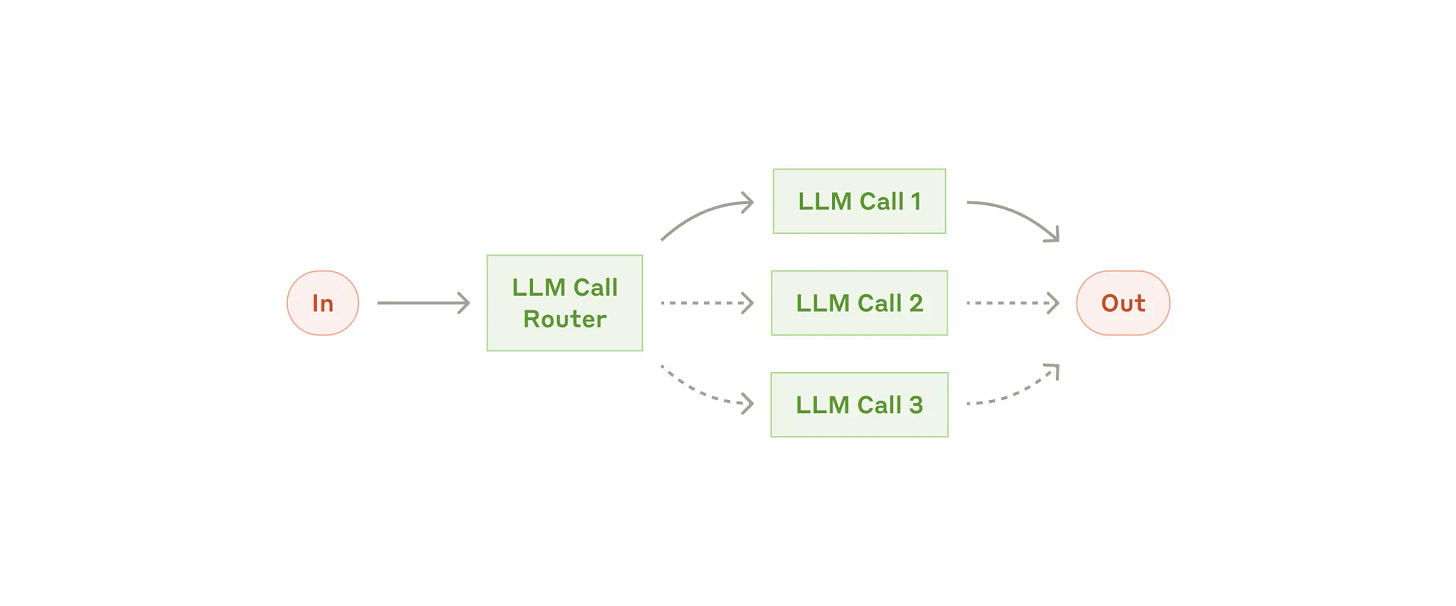

Pattern 2: Routing

The Routing pattern classifies an input and directs it to specialized handlers based on the classification. It's like a receptionist who routes calls to the appropriate department.

For a customer support system, we might route queries into categories like:

Technical troubleshooting

Account management

Billing questions

Return requests

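Each category has a specialized handler optimized for that type of query. Because tuning a single prompt for one kind of query can degrade its performance on others, routing to separate handlers usually produces better responses than a one-size-fits-all approach.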
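A minimal routing sketch. In practice `classify` would itself be an LLM call (or a cheaper classifier); here it uses keyword matching so the example runs without a model. The handler strings are hypothetical stand-ins for specialized prompts.

```python
from typing import Callable

def classify(query: str) -> str:
    """Stand-in for an LLM classification call. A real router would ask the model to reply
    with exactly one category name; keyword matching keeps this sketch runnable offline."""
    q = query.lower()
    if any(word in q for word in ("refund", "return")):
        return "returns"
    if any(word in q for word in ("invoice", "charge", "bill")):
        return "billing"
    if any(word in q for word in ("password", "login", "account")):
        return "account"
    return "technical"

# Each handler would normally wrap its own specialized prompt (and possibly its own tools).
HANDLERS: dict[str, Callable[[str], str]] = {
    "technical": lambda q: f"[troubleshooting handler] {q}",
    "account": lambda q: f"[account-management handler] {q}",
    "billing": lambda q: f"[billing handler] {q}",
    "returns": lambda q: f"[returns handler] {q}",
}

def route(query: str) -> str:
    category = classify(query)
    # Fall back to a default handler if the classifier returns an unexpected label.
    handler = HANDLERS.get(category, HANDLERS["technical"])
    return handler(query)

for query in ("I was charged twice this month", "How do I return my order?", "The app crashes on startup"):
    print(route(query))
```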

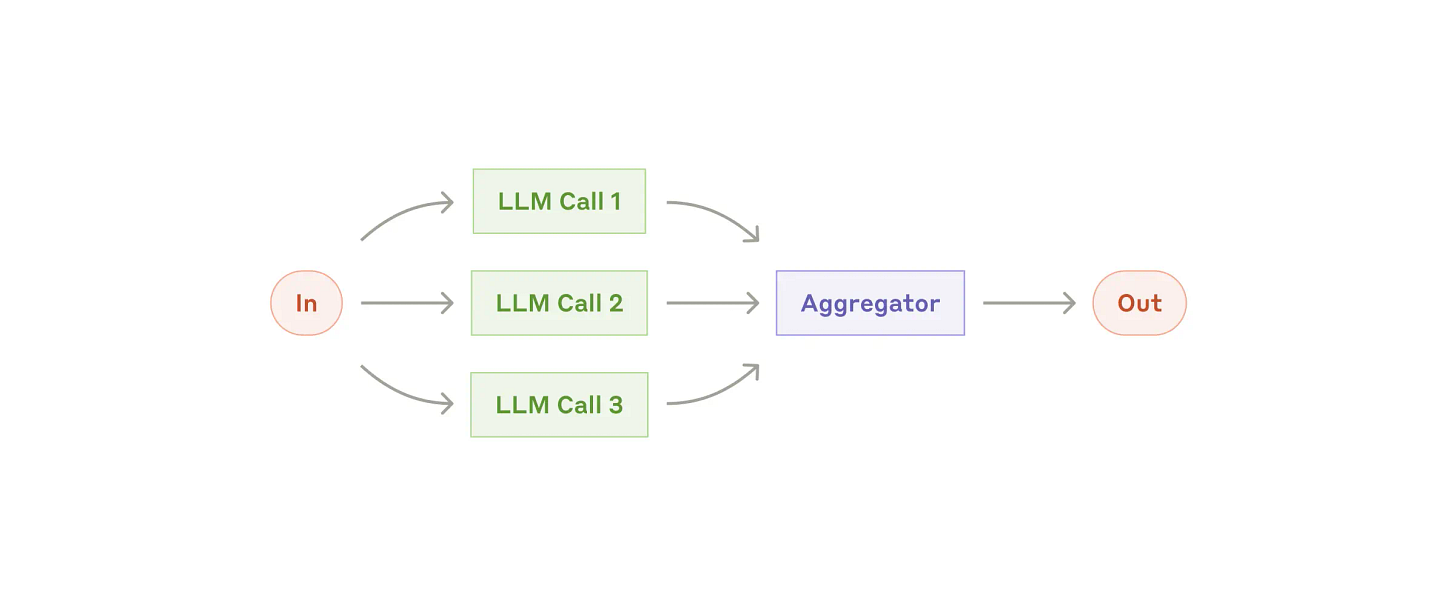

Pattern 3: Parallelization

The Parallelization pattern runs multiple LLM calls in parallel and aggregates their results. It has two main variants:

Sectioning: Breaking a task into independent subtasks (like having different experts analyze different aspects of a problem)

Voting: Running the same task multiple times to get consensus (like getting multiple medical opinions)

For example, when analyzing a product review, we might simultaneously evaluate sentiment, identify key issues, and extract product suggestions, then combine these insights into a comprehensive analysis.
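Both variants can be sketched with Python's standard `concurrent.futures`; threads are a reasonable fit because model calls are I/O-bound. `fake_llm` returns canned answers in place of real model calls, and the voting answers are hard-coded to represent three hypothetical runs of the same yes/no prompt.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def fake_llm(prompt: str) -> str:
    """Placeholder for an I/O-bound model call; returns canned answers keyed on the prompt's first word."""
    canned = {
        "Sentiment": "mixed",
        "Issues": "battery drains within hours",
        "Suggestions": "add a power-saving mode",
    }
    return canned.get(prompt.split(" ", 1)[0], "unknown")

def analyze_review(review: str) -> dict[str, str]:
    """Sectioning: run independent subtasks concurrently, then combine the results."""
    tasks = {
        "sentiment": f"Sentiment of this review (positive, negative or mixed):\n{review}",
        "issues": f"Issues mentioned in this review:\n{review}",
        "suggestions": f"Suggestions for the product in this review:\n{review}",
    }
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {key: pool.submit(fake_llm, prompt) for key, prompt in tasks.items()}
        return {key: future.result() for key, future in futures.items()}

def majority_vote(answers: list[str]) -> str:
    """Voting: keep the most common answer across repeated runs of the same prompt."""
    return Counter(answers).most_common(1)[0][0]

review = "Great screen, but the battery drains within hours. Please add a power-saving mode."
print(analyze_review(review))
print(majority_vote(["yes", "no", "yes"]))  # answers from three hypothetical runs
```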

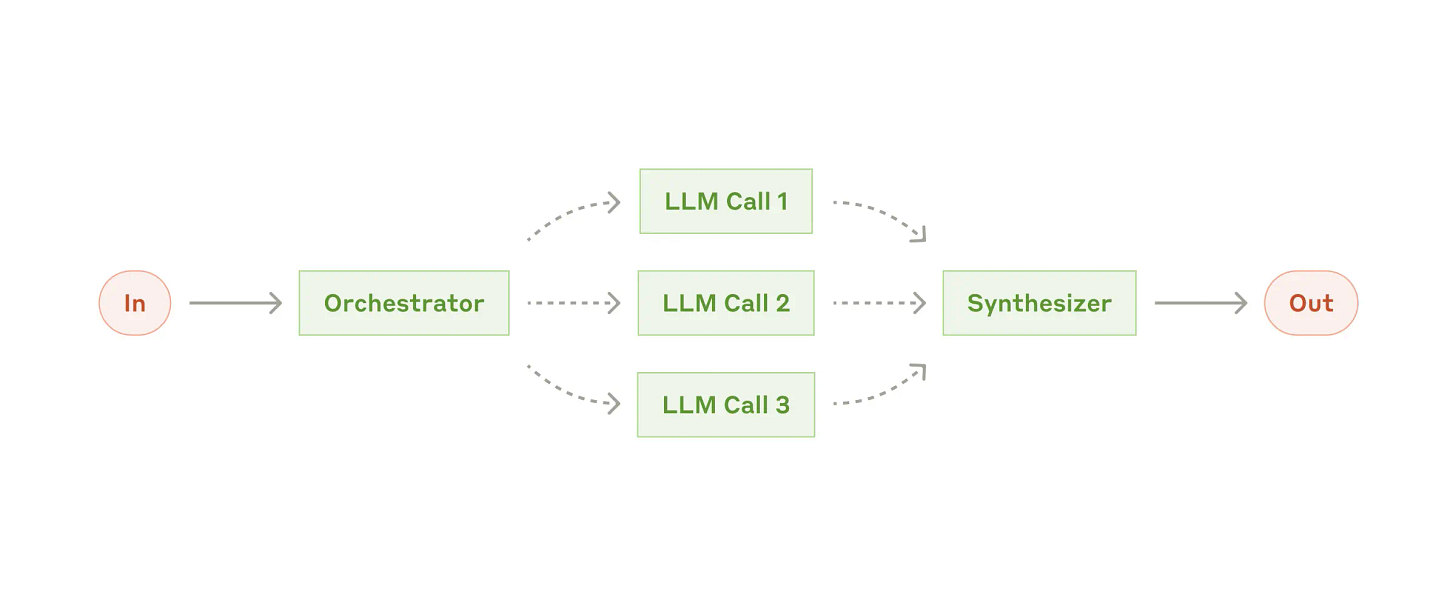

Pattern 4: Orchestrator-Workers

The Orchestrator-Workers pattern uses a central LLM to dynamically break down tasks, delegate them to worker LLMs, and synthesize their results. It's like a project manager who divides a project into tasks, assigns them to team members, and integrates their work into a cohesive deliverable.

This pattern is particularly effective for complex tasks where the subtasks can't be predetermined. For instance, writing a business plan might require market analysis, financial projections, marketing strategy, and operational planning—all delegated to specialized workers and then synthesized by the orchestrator.
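The key difference from parallelization is that the subtasks are decided at runtime by the orchestrator rather than fixed in code. In this sketch `fake_orchestrator`, `fake_worker`, and `fake_synthesizer` are hypothetical placeholders for LLM calls, and the JSON list returned by the orchestrator is an assumed output format.

```python
import json
from concurrent.futures import ThreadPoolExecutor

def fake_orchestrator(task: str) -> str:
    """Placeholder: a real orchestrator LLM decides the subtasks at runtime
    (here, returned as a JSON list of strings)."""
    return json.dumps(["market analysis", "financial projections",
                       "marketing strategy", "operational planning"])

def fake_worker(task: str, subtask: str) -> str:
    """Placeholder worker LLM call that drafts one section."""
    return f"## {subtask.title()}\n(draft {subtask} for: {task})"

def fake_synthesizer(task: str, sections: list[str]) -> str:
    """Placeholder for the orchestrator's final call that merges the workers' output."""
    return f"# {task}\n\n" + "\n\n".join(sections)

def orchestrate(task: str) -> str:
    subtasks = json.loads(fake_orchestrator(task))  # decided per task, not hard-coded
    with ThreadPoolExecutor() as pool:
        # pool.map returns results in the same order as the subtasks.
        sections = list(pool.map(lambda sub: fake_worker(task, sub), subtasks))
    return fake_synthesizer(task, sections)

plan = orchestrate("Business plan for a bike-repair startup")
print(plan)
```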

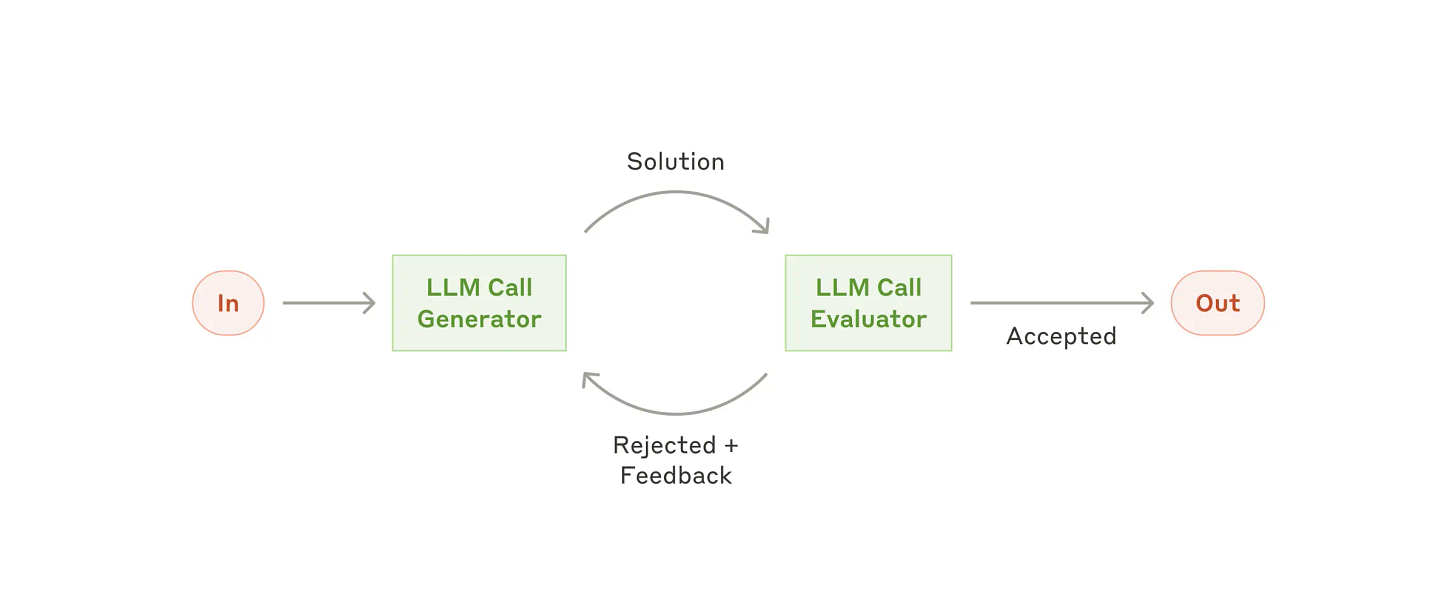

Pattern 5: Evaluator-Optimizer

The Evaluator-Optimizer pattern uses one LLM to generate solutions and another to evaluate and provide feedback in an iterative loop. It's like having a writer and an editor working together to refine a piece of content.

This pattern creates a feedback loop that can significantly improve output quality. For example, to craft a persuasive email:

The optimizer generates an initial draft

The evaluator assesses it against criteria like clarity, persuasiveness, and tone

The optimizer revises based on the feedback

This cycle continues until the draft meets a quality threshold
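A sketch of this loop with stubbed model calls: `generate` plays the optimizer and `evaluate` the evaluator, and both are hypothetical placeholders. The `max_rounds` cap is an added safeguard (an assumption, not part of the description above) so the loop terminates even if the threshold is never met.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Evaluation:
    score: int     # 0-10 rating from the evaluator
    feedback: str  # concrete suggestions for the next revision

def generate(task: str, previous: Optional[str], feedback: Optional[str]) -> str:
    """Placeholder optimizer call. A real one would prompt an LLM with the task,
    the previous draft and the evaluator's feedback; this stub just tags each revision."""
    revision = 0 if previous is None else previous.count("[revised]") + 1
    return f"Draft for {task!r}" + " [revised]" * revision

def evaluate(draft: str) -> Evaluation:
    """Placeholder evaluator call; a real one would judge clarity, persuasiveness and tone."""
    score = min(10, 5 + 2 * draft.count("[revised]"))
    return Evaluation(score, "Tighten the opening and add a clear call to action.")

def evaluator_optimizer(task: str, threshold: int = 8, max_rounds: int = 5) -> tuple[str, int]:
    draft = generate(task, None, None)
    for round_no in range(1, max_rounds + 1):
        result = evaluate(draft)
        # Stop at the quality threshold, or when the round budget is used up.
        if result.score >= threshold or round_no == max_rounds:
            return draft, result.score
        draft = generate(task, draft, result.feedback)
    raise ValueError("max_rounds must be at least 1")

email, score = evaluator_optimizer("persuasive email announcing a price change")
print(score, email)
```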

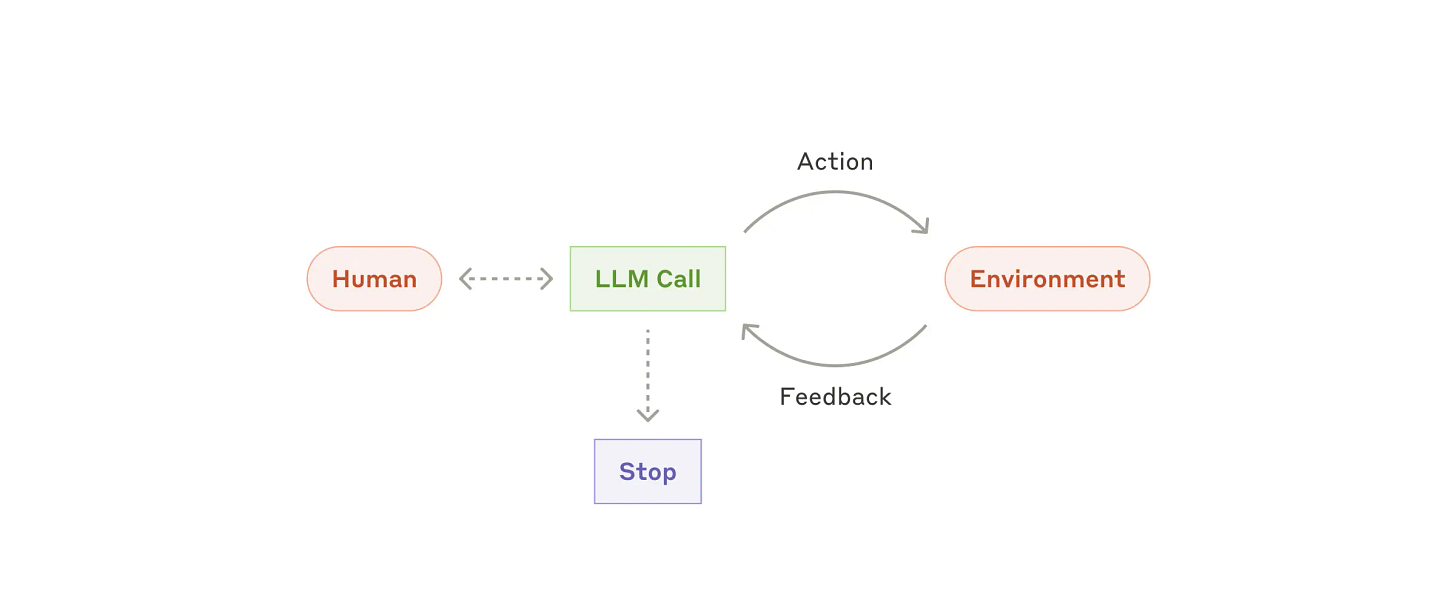

Pattern 6: Autonomous Agent

The Autonomous Agent pattern creates a fully self-directed agent that can plan, execute, and reflect on actions to accomplish complex tasks. It's like having an independent contractor who figures out what needs to be done and does it with minimal supervision.

This is the most flexible but also the most complex pattern. The agent typically goes through cycles of:

Analyzing the task and creating a plan

Executing steps in the plan, using tools as needed

Observing the results of its actions

Adapting its plan based on feedback

Continuing execution until the task is complete

This pattern is particularly powerful for open-ended tasks where the solution path isn't clear upfront.
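A minimal sketch of the plan-act-observe loop, assuming two hypothetical tools (`search_kb`, `update_ticket`). The planning and adapting happen inside the model's decision step, which `fake_model` replaces here with a fixed script; the `max_steps` limit is an added safeguard against runaway loops.

```python
from typing import Any, Callable

# Hypothetical tools; a real agent would reach external systems through them.
def search_kb(query: str) -> str:
    return "Article 42: reset your password from the login page."  # canned result for the sketch

def update_ticket(status: str) -> str:
    return f"ticket status set to {status}"

TOOLS: dict[str, Callable[[str], str]] = {"search_kb": search_kb, "update_ticket": update_ticket}

Step = tuple[str, str, str]  # (tool name, tool input, observation)

def fake_model(task: str, history: list[Step]) -> dict[str, Any]:
    """Placeholder for the LLM's decision step. A real model would plan from the task,
    read the observations so far, and revise its plan; this stub follows a fixed script."""
    used = {tool for tool, _, _ in history}
    if "search_kb" not in used:
        return {"action": "search_kb", "input": task}
    if "update_ticket" not in used:
        return {"action": "update_ticket", "input": "resolved"}
    return {"action": "finish", "input": f"Answered using: {history[0][2]}"}

def run_agent(task: str, max_steps: int = 10) -> tuple[str, list[Step]]:
    history: list[Step] = []
    for _ in range(max_steps):  # hard step limit so a confused agent cannot loop forever
        decision = fake_model(task, history)
        if decision["action"] == "finish":
            return decision["input"], history
        observation = TOOLS[decision["action"]](decision["input"])  # act...
        history.append((decision["action"], decision["input"], observation))  # ...then observe
    return "Stopped: step limit reached", history

answer, steps = run_agent("Customer cannot log in")
print(answer)
for step in steps:
    print(step)
```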

Building a Real-World Application

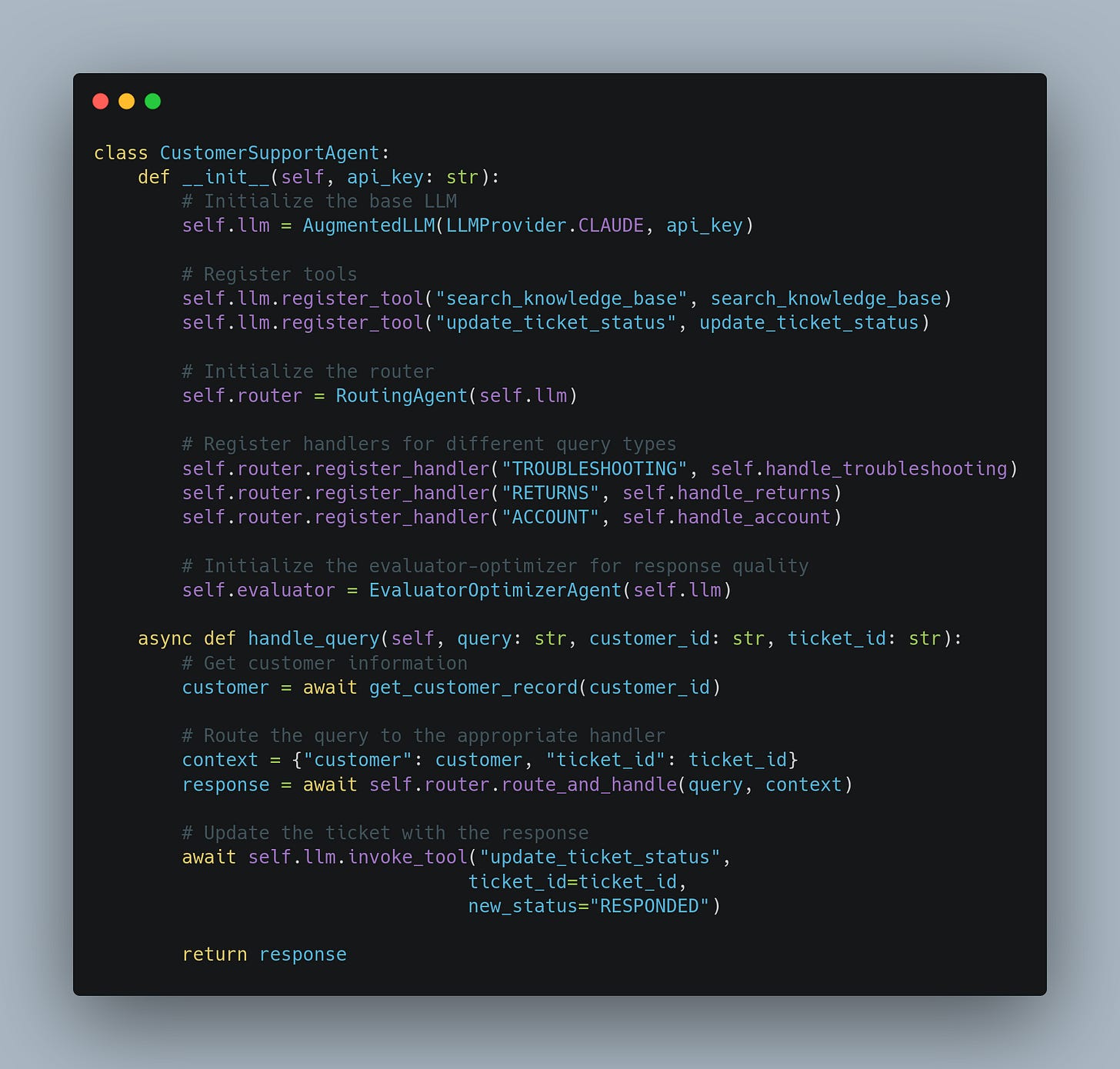

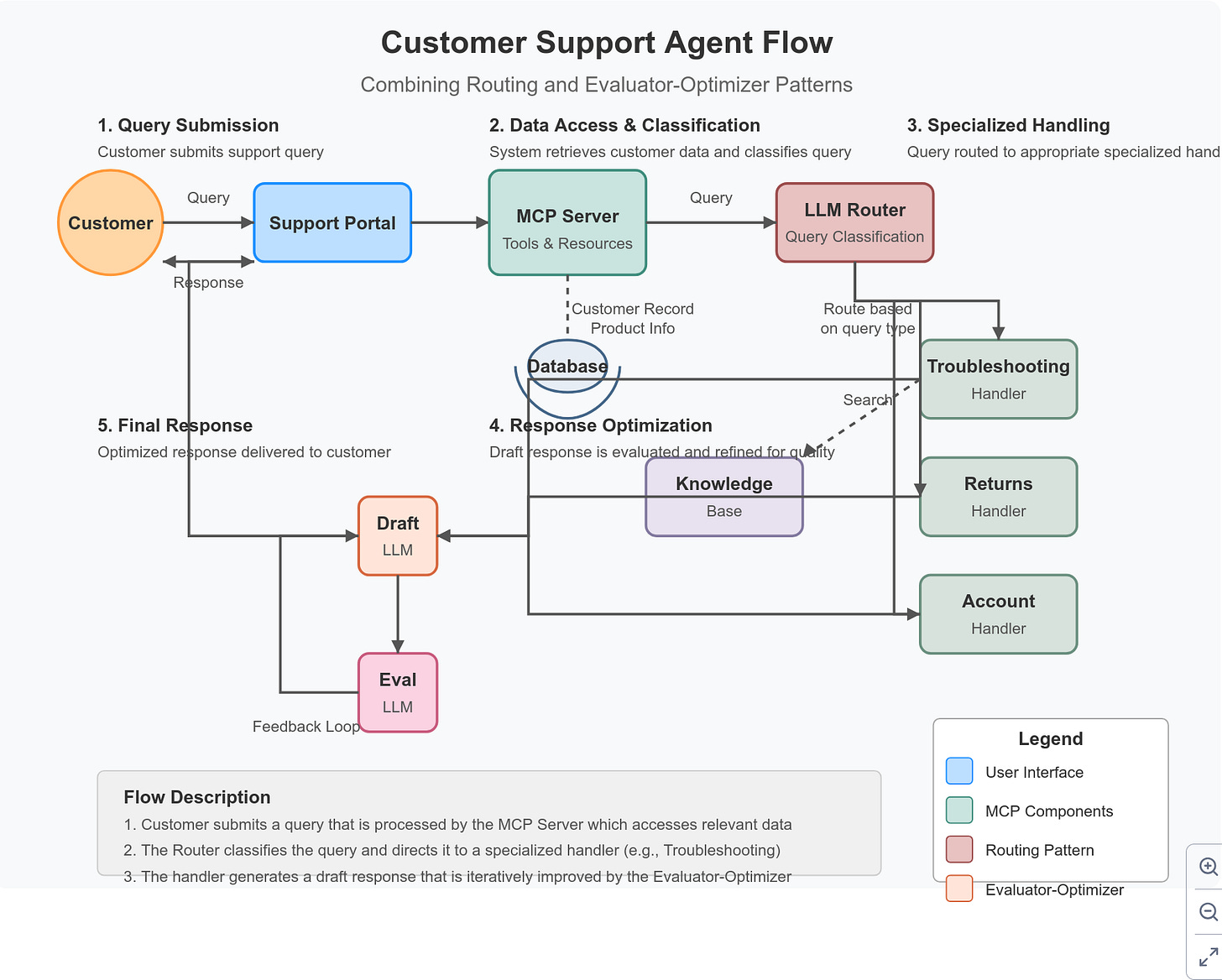

To illustrate how these patterns work together, let's look at a customer support agent that combines the Routing and Evaluator-Optimizer patterns.

In this example:

We use the MCP architecture to separate tools, resources, and prompts

We employ the Routing pattern to direct queries to specialized handlers

We use the Evaluator-Optimizer pattern (not shown in detail) to ensure high-quality responses

All of this happens within a clean, maintainable structure

The result is a customer support agent that can:

Classify and route queries to the appropriate handler

Access customer and product information securely

Search for relevant knowledge base articles

Generate high-quality, personalized responses

Update ticket statuses in the support system

And it does all of this with a clean separation of concerns that makes the system secure, maintainable, and governable.
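As a compact, hypothetical illustration (not the code behind the figures above), the pieces could fit together in one request handler: a resource read with sensitive fields removed, a router, a per-route prompt template, a single evaluator check, and a tool call. Every model call is a stub, and all names and data are illustrative.

```python
from string import Template

# Resource: application-controlled data with sensitive fields stripped (hypothetical store).
CUSTOMERS = {"c-1": {"name": "Ada", "plan": "pro", "card_number": "4111111111111111"}}

def customer_resource(customer_id: str) -> dict[str, str]:
    return {k: v for k, v in CUSTOMERS[customer_id].items() if k != "card_number"}

# Tool: an action with side effects in the support system (hypothetical store).
TICKETS = {"T-7": "open"}

def update_ticket_status(ticket_id: str, status: str) -> None:
    TICKETS[ticket_id] = status

# Prompts: one template per route.
TEMPLATES = {
    "billing": Template("Billing reply for $name ($plan plan): $query"),
    "technical": Template("Troubleshooting reply for $name ($plan plan): $query"),
}

def classify(query: str) -> str:
    """Stand-in for the LLM router."""
    return "billing" if "charge" in query.lower() else "technical"

def fake_llm(prompt: str) -> str:
    """Stand-in for the model call that drafts the reply."""
    return f"Hi! {prompt}"

def passes_review(reply: str) -> bool:
    """Stand-in for the evaluator, which would be a second LLM call in practice."""
    return reply.startswith("Hi!")

def handle(query: str, customer_id: str, ticket_id: str) -> str:
    route = classify(query)                                       # Routing
    context = customer_resource(customer_id)                      # Resource read
    prompt = TEMPLATES[route].substitute(query=query, **context)  # Prompt template
    reply = fake_llm(prompt)
    if not passes_review(reply):                                  # One evaluator-optimizer round
        reply = fake_llm(prompt + "\nRevise for clarity and tone.")
    # In an MCP deployment the model would request this tool call; here the app calls it directly.
    update_ticket_status(ticket_id, "awaiting_customer")
    return reply

reply = handle("Why was I charged twice?", "c-1", "T-7")
print(reply)
```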

Benefits and Best Practices

Adopting MCP and agent patterns provides several key benefits:

Enhanced Security: Sensitive data remains within application boundaries

Improved Maintainability: Each component can be updated independently

Better Governance: Clear audit trails of model actions

Scalability: New tools and resources can be added without changing other components

Consistency: Standardized formats for user interactions

When implementing these patterns, follow these best practices:

Start Simple: Begin with the simplest pattern that meets your needs

Design Clear Tool Interfaces: Make tools atomic, well-documented, and focused

Implement Proper Resource Security: Apply authentication, authorization, and data filtering

Craft Effective Prompts: Use consistent formats and clear instructions

Monitor Performance: Track costs, latency, and response quality

Iteratively Improve: Refine your implementation based on real-world usage

Conclusion

The Model Context Protocol and agent workflow patterns represent a significant evolution in how we build applications with LLMs. By moving beyond simple prompting to more structured architectures, we can create AI systems that are more capable, secure, and maintainable.

These patterns aren't just theoretical—they're being used today by teams building production LLM applications across industries. From customer support to content creation, from research assistants to coding agents, the combination of MCP and agent patterns provides a foundation for building the next generation of AI applications.

As you embark on your own journey of building with LLMs, I encourage you to adopt these patterns and adapt them to your specific needs. The future of AI isn't just about bigger models or better prompts—it's about thoughtful architectures that leverage these powerful tools in safe, effective, and maintainable ways.

Inspired by groundbreaking research from Anthropic, including the insights shared in the "Building Effective Agents" and "Model Context Protocol" publications. Images and conceptual frameworks are credited to the Anthropic research team.

In the rapidly evolving landscape of artificial intelligence, mastering the Model Context Protocol isn't just about writing code—it's about orchestrating intelligent agents that can transform complex challenges into elegant, adaptive solutions, bridging the gap between human creativity and machine intelligence.